Europe approved the AI Act meant to protect us from the risks of AI

The new law introduces a risk-based regulatory system for AIs

AI is everywhere nowadays, especially since Microsoft pushes Copilot onto us with every Windows update. Yet, while the technology can help us work more efficiently, it is also responsible for spreading inaccurate information.

On top of that, some of us put too much trust in AI models and believe everything they generate without second-guessing it. To reduce these risks, the European Parliament approved the Artificial Intelligence Act (EU AI Act).

What is the EU AI Act 2024?

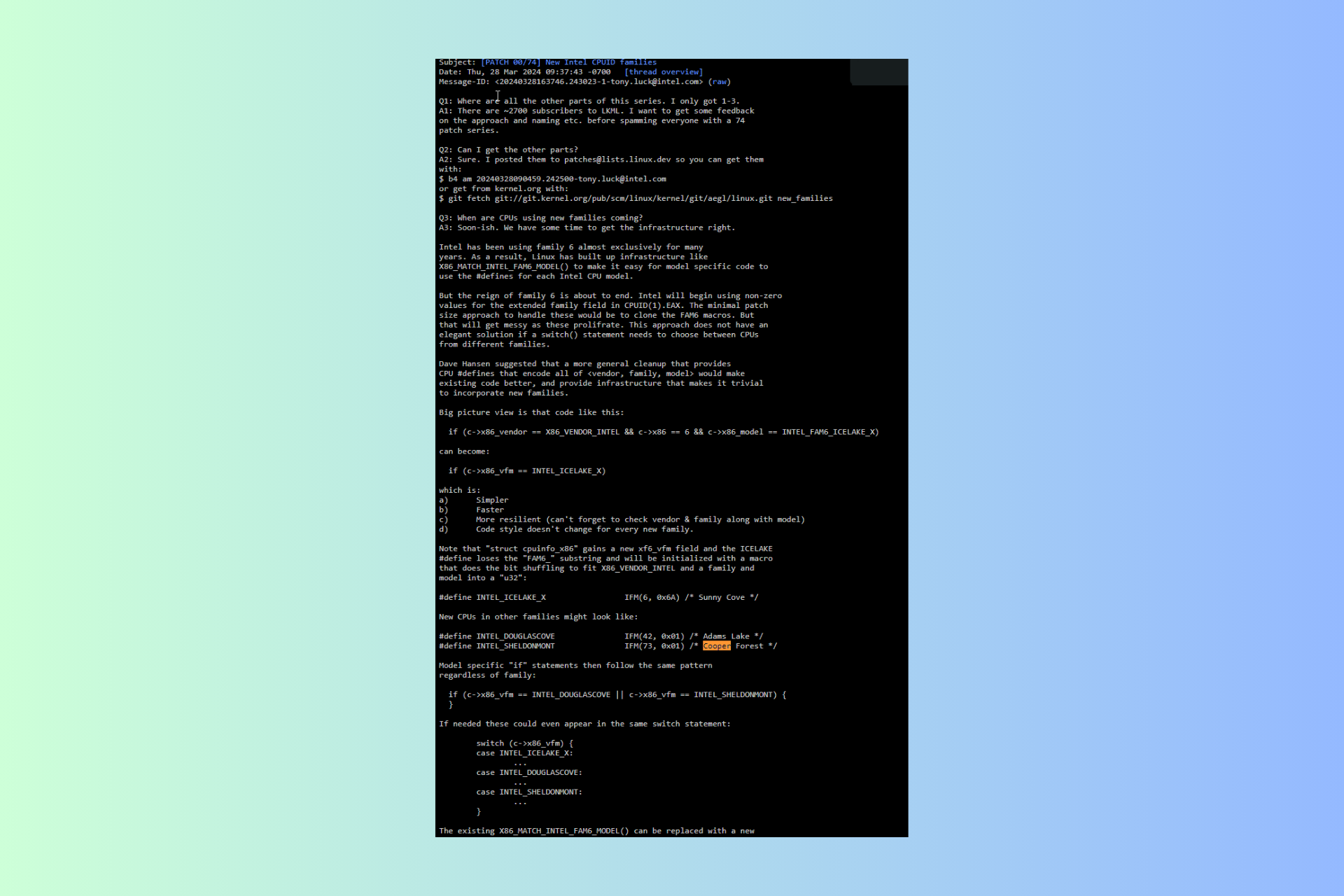

The new EU AI Act works like a rulebook for AI and its developers, setting out specific requirements and obligations. For example, developers must ensure their products comply with the law before releasing them publicly. In addition, the AI Act classifies AI models using a risk-based approach with four risk levels: minimal, limited, high, and unacceptable.

You can use minimal-risk AI freely. The law doesn’t restrict these tools because they perform tasks such as upscaling graphics and filtering spam emails, much like plugins and add-ons. However, that’s not the case for limited-risk AI, which is subject to the new legal framework. For instance, chatbots need to disclose when content is artificially generated and notify users that they are talking with a machine.

The EU AI Act places various AI technologies in the high-risk category. Some of the most commonly encountered are those used for scoring exams, transport, evaluation, health care, and border control management. To reduce the chances of a dangerous outcome, developers have to assess these systems and provide detailed documentation on how they work and how they are used.

Unlike the previous types, AI systems that fall under the unacceptable category, such as the ones used by China for social scoring, will be banned.

Ultimately, the EU AI Act is meant to protect us from the risks of using AI without thinking. The framework also aims to introduce transparency obligations for all AI models. In addition, the legislation is designed to be future-proof: it obliges developers to thoroughly assess their products and any future updates. The rules could also end up being implemented globally.

What are your thoughts? Is the EU AI Act too strict? Let us know in the comments.